If you run a self-hosted WordPress site behind the Cloudflare CDN and use the LiteSpeed Cache plugin, you will want to read this post.

When you manage a WordPress website with dynamic content, optimizing page load times is crucial for user experience and SEO. Cloudflare’s caching settings play a significant role in this optimization, particularly for WordPress sites that use a caching plugin such as LiteSpeed Cache and route traffic through Cloudflare for additional performance and security benefits.

Cloudflare

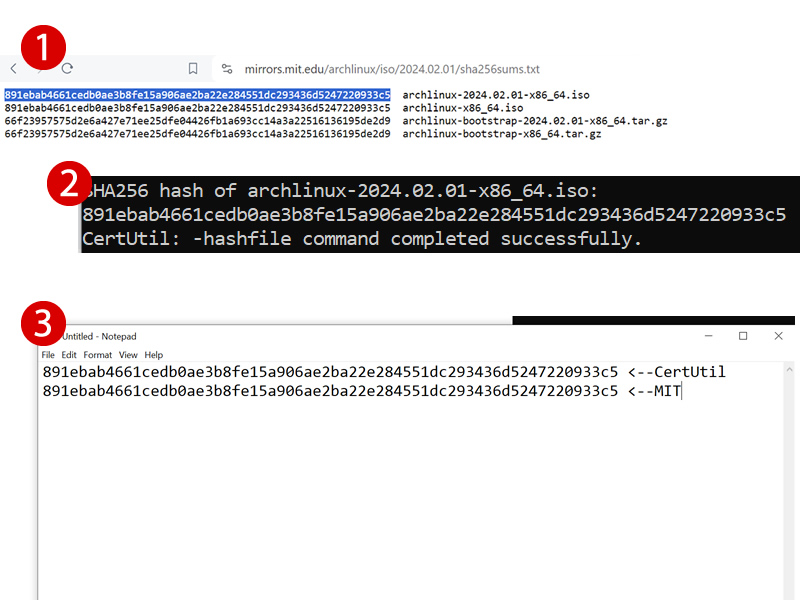

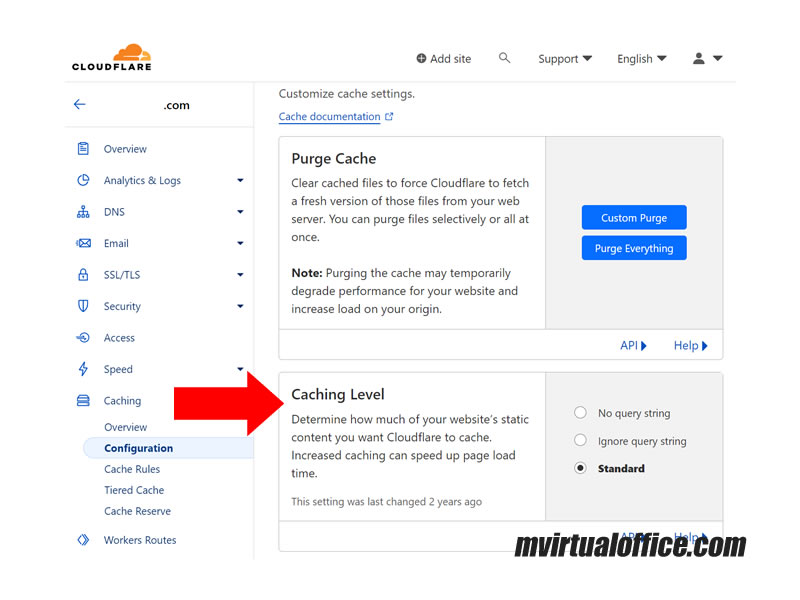

A notable setting in Cloudflare is the “Caching Level,” which controls how query strings affect the caching of your website’s static content. Caching more of that content can significantly improve page load times. There are three options available:

- No Query String

- Ignore Query String

- Standard

Understanding each option is essential to knowing how your website gets cached and served to your visitors, so let’s review them.

No Query String

This option tells Cloudflare to serve your website’s static content (like images, CSS, and JavaScript files) from cache only when the URL has no query string; requests that include one are passed through to your origin server. A query string is the part of a URL that comes after a question mark (?) and is used to pass additional parameters. For example, https://example.com/photo.jpg?width=300 has a query string that specifies the width of an image.

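It’s most useful for sites whose static files are normally requested without query strings, where a query string signals a request that should not be answered from cache. Because every URL with a query string goes to your server, this is the most conservative of the three levels: if your baseball site’s static resources never carry query parameters, they are still cached, while any parameterized request is fetched fresh.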

Ignore Query String

With this setting, Cloudflare caches the static content without considering the query strings at all. This means that a request for https://example.com/photo.jpg?width=300 is treated the same as a request for https://example.com/photo.jpg. Cloudflare serves the same cached content for both URLs.

This option is suitable if your website’s response for a static file doesn’t depend on the query string parameters. For instance, if the image’s width on your baseball site is controlled by CSS rather than the query string, this option could reduce the number of cache misses and speed up the delivery of content.

Standard

This is the default setting and a middle ground between the two extremes. Cloudflare will cache static content based on the combination of the URL and the query string. Different query strings will result in different cached versions of the same URL.

This option is ideal for websites where the content might change based on query string parameters. For a baseball site, if you have dynamic content that changes based on query parameters (like sorting order of player statistics), this setting ensures that users see the correct version of your content.
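To make the difference concrete, here is a simplified Python model of how each Caching Level maps a request URL to a cache entry. The level names and the `cache_key` function are hypothetical; Cloudflare’s real cache key includes more than the URL, so treat this as a sketch of the behavior described above, not Cloudflare’s implementation.

```python
from typing import Optional
from urllib.parse import urlsplit


def cache_key(url: str, level: str) -> Optional[str]:
    """Return a simplified cache key for `url` under a Caching Level,
    or None when the request would bypass the cache entirely.

    Illustrative model only: the level names are made up for this sketch.
    """
    parts = urlsplit(url)
    base = f"{parts.scheme}://{parts.netloc}{parts.path}"
    if level == "no_query_string":
        # Only URLs without a query string are served from cache.
        return None if parts.query else base
    if level == "ignore_query_string":
        # The query string is dropped, so every variant shares one entry.
        return base
    if level == "standard":
        # The query string is part of the key, so each variant is cached separately.
        return f"{base}?{parts.query}" if parts.query else base
    raise ValueError(f"unknown caching level: {level}")


if __name__ == "__main__":
    urls = [
        "https://example.com/photo.jpg",
        "https://example.com/photo.jpg?width=300",
    ]
    for level in ("no_query_string", "ignore_query_string", "standard"):
        keys = [cache_key(u, level) for u in urls]
        entries = {k for k in keys if k is not None}
        bypassed = keys.count(None)
        print(f"{level}: entries={len(entries)}, bypassed={bypassed}")
```

For the two image URLs, the model reports one cache entry and one bypassed request under No Query String, one shared entry under Ignore Query String, and two separate entries under Standard.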

Cloudflare and LiteSpeed Integration

When integrating Cloudflare with the LiteSpeed Cache plugin for a WordPress site, ensuring compatibility and optimizing the settings for both services are essential to maximize your website’s performance and SEO.

Here are specific settings and considerations to keep in mind to ensure Cloudflare and LiteSpeed work harmoniously:

Cache Purging

- LiteSpeed Configuration: Ensure that LiteSpeed is set to automatically purge cached content when it’s updated. This includes posts, pages, and other dynamic content that changes.

- Cloudflare Configuration: Cloudflare does not know when your WordPress content changes, so its cached copies must be purged separately, either from the dashboard or through its API. You can automate this by calling Cloudflare’s API in conjunction with the LiteSpeed plugin or WordPress hooks.
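The Cloudflare side of that automation can be as small as one API call. The Python sketch below uses only the standard library to send a purge-by-URL request to Cloudflare’s `POST /zones/{zone_id}/purge_cache` endpoint with an API token. The environment variable names and the idea of running it as a script after LiteSpeed purges a post are assumptions for illustration; how you trigger it (a deploy step, a cron job, or a WordPress hook that calls it) is up to you.

```python
import json
import os
import sys
import urllib.request
from typing import Sequence

CLOUDFLARE_API = "https://api.cloudflare.com/client/v4"


def build_purge_request(zone_id: str, api_token: str, urls: Sequence[str]) -> urllib.request.Request:
    """Build (but don't send) a Cloudflare purge-by-URL request."""
    body = json.dumps({"files": list(urls)}).encode("utf-8")
    return urllib.request.Request(
        f"{CLOUDFLARE_API}/zones/{zone_id}/purge_cache",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
    )


def purge_urls(zone_id: str, api_token: str, urls: Sequence[str]) -> bool:
    """Send the purge request and return Cloudflare's `success` flag."""
    request = build_purge_request(zone_id, api_token, urls)
    # urlopen raises HTTPError for non-2xx responses (e.g. a bad token).
    with urllib.request.urlopen(request, timeout=10) as response:
        return bool(json.load(response).get("success"))


if __name__ == "__main__":
    # Hypothetical environment variable names; the token needs cache purge permission.
    ok = purge_urls(os.environ["CF_ZONE_ID"], os.environ["CF_API_TOKEN"], sys.argv[1:])
    print("purged" if ok else "purge failed")
```

Purging specific URLs rather than everything keeps the rest of Cloudflare’s cache warm. Cloudflare caps how many URLs a single call may contain, so a large list should be sent in batches.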

Cache Exclusion Rules

Both Cloudflare and LiteSpeed allow you to exclude specific URLs or types of content from being cached. It’s important to harmonize these settings to avoid caching dynamic content that should be served fresh (e.g., user profiles or custom responses based on cookies).
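One way to keep the two exclusion lists in sync is to define them once and derive each tool’s configuration from that definition. The Python sketch below assumes a hypothetical shared list (WordPress’s admin and login paths plus its logged-in and commenter cookies) and emits a Cloudflare Rules language expression you could use in a cache rule set to bypass the cache; the same prefixes would go into LiteSpeed’s exclusion settings (such as Do Not Cache URIs and Do Not Cache Cookies) by hand. Check the generated expression in Cloudflare’s rule editor before relying on it.

```python
from typing import Sequence

# Hypothetical shared exclusion list; adjust it for your own site.
EXCLUDED_PATH_PREFIXES = ("/wp-admin", "/wp-login.php")
EXCLUDED_COOKIE_PREFIXES = ("wordpress_logged_in_", "comment_author_")


def should_bypass(path: str, cookie_names: Sequence[str]) -> bool:
    """Return True if a request should skip the cache under the shared rules."""
    if path.startswith(EXCLUDED_PATH_PREFIXES):
        return True
    return any(name.startswith(EXCLUDED_COOKIE_PREFIXES) for name in cookie_names)


def to_cloudflare_expression() -> str:
    """Render the same rules as a Cloudflare Rules language expression."""
    clauses = [f'starts_with(http.request.uri.path, "{p}")' for p in EXCLUDED_PATH_PREFIXES]
    clauses += [f'http.cookie contains "{c}"' for c in EXCLUDED_COOKIE_PREFIXES]
    return " or ".join(clauses)


if __name__ == "__main__":
    print(to_cloudflare_expression())
```

Keeping a single source of truth means a new exclusion (for example, a members-only section) cannot be added to one layer and forgotten in the other.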

CDN Settings

If you’re using Cloudflare as a CDN, make sure the LiteSpeed Cache settings account for it. Because Cloudflare proxies your whole domain, you usually don’t need LiteSpeed to rewrite asset URLs to a separate CDN hostname, so disabling the CDN features within LiteSpeed often prevents conflicts or double caching issues.

Optimization Features

LiteSpeed offers various optimization features (such as image optimization, CSS/JS minification, and lazy loading). When these features are enabled in LiteSpeed, ensure that similar settings are not redundantly enabled in Cloudflare, as this can lead to issues such as broken pages or styles.

Browser Cache TTL

Both Cloudflare and LiteSpeed allow you to set Browser Cache TTL (Time To Live), which controls how long the content is cached in the visitor’s browser. Make sure these settings are aligned to prevent caching issues or stale content.
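Aligning the two settings is easier once you know which one wins. Cloudflare’s documentation describes its Browser Cache TTL as overriding the origin’s `Cache-Control` max-age only when the origin value is shorter, unless you select “Respect Existing Headers.” The Python sketch below models that rule as an assumption (check it against Cloudflare’s current docs); `effective_browser_ttl` is a hypothetical helper, not part of either product.

```python
import re
from typing import Optional

# Matches a standalone max-age directive (not s-maxage).
_MAX_AGE = re.compile(r"(?:^|,)\s*max-age\s*=\s*(\d+)", re.IGNORECASE)


def parse_max_age(cache_control: str) -> Optional[int]:
    """Extract max-age (in seconds) from a Cache-Control header, if present."""
    match = _MAX_AGE.search(cache_control)
    return int(match.group(1)) if match else None


def effective_browser_ttl(origin_cache_control: str, cloudflare_ttl: Optional[int]) -> Optional[int]:
    """Model the browser TTL visitors end up with.

    cloudflare_ttl=None stands for Cloudflare's "Respect Existing Headers".
    """
    origin = parse_max_age(origin_cache_control)
    if cloudflare_ttl is None:
        return origin  # Cloudflare passes the origin header through
    if origin is None:
        return cloudflare_ttl  # nothing from the origin, so Cloudflare's value applies
    return max(origin, cloudflare_ttl)  # Cloudflare overrides only a shorter origin value
```

For example, with a LiteSpeed header of `max-age=31557600` (one year) and a Cloudflare Browser Cache TTL of 14400 seconds (4 hours), the model returns 31557600: lowering Cloudflare’s value alone would not shorten browser caching, so the LiteSpeed setting is the one to change.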

WebP Compatibility

If you’re using LiteSpeed’s feature to serve WebP images for supported browsers, ensure Cloudflare’s “Polish” feature (if used) is configured to respect WebP versions or to avoid converting images that have already been optimized by LiteSpeed.

Edge Caching

LiteSpeed’s edge caching, offered through its QUIC.cloud CDN, can serve content directly from an edge server network. If you’re also using Cloudflare, make sure the configurations of the two caching layers don’t conflict, especially regarding cache purging and TTL settings.

Testing and Monitoring

After configuring both Cloudflare and LiteSpeed Cache settings, it’s crucial to test your website’s performance and monitor for any issues. Tools like GTmetrix, Google PageSpeed Insights, or Cloudflare’s analytics can provide insights into how effectively your content is being cached and delivered.
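Alongside those tools, response headers show which layer served a page: Cloudflare adds `CF-Cache-Status` and LiteSpeed adds `X-LiteSpeed-Cache`. The Python sketch below fetches a URL with the standard library and reports both values; the script’s name, user agent, and output format are assumptions.

```python
import sys
import urllib.request
from typing import Dict, Mapping, Optional


def describe_cache_layers(headers: Mapping[str, str]) -> Dict[str, Optional[str]]:
    """Pick out the cache-status headers from both layers (None if absent)."""
    lowered = {name.lower(): value for name, value in headers.items()}
    return {
        "cloudflare": lowered.get("cf-cache-status"),  # e.g. HIT, MISS, DYNAMIC, BYPASS
        "litespeed": lowered.get("x-litespeed-cache"),  # e.g. hit, miss
    }


def check(url: str) -> Dict[str, Optional[str]]:
    """Fetch `url` and report which cache layers answered."""
    request = urllib.request.Request(url, headers={"User-Agent": "cache-check/0.1"})
    with urllib.request.urlopen(request, timeout=10) as response:
        return describe_cache_layers(dict(response.headers.items()))


if __name__ == "__main__":
    for url in sys.argv[1:]:
        print(url, check(url))
```

A Cloudflare value of `DYNAMIC` means Cloudflare did not treat the response as cacheable, which is its default for HTML pages; `BYPASS`, or a LiteSpeed `miss` on a page you expected to be cached, is worth checking against your exclusion rules. On a Cloudflare `HIT`, the LiteSpeed header is the one stored with the cached copy, not a fresh result from your server.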

Documentation and Support

Both LiteSpeed and Cloudflare offer extensive documentation and support forums. If you encounter specific issues or need advice on settings, referring to the official documentation or reaching out to support can provide tailored advice for your website’s configuration.

By paying attention to these settings and considerations, you can ensure that Cloudflare and LiteSpeed Cache work together efficiently, improving your WordPress site’s speed, performance, and SEO.

Choosing the Right Option

When it comes to Cloudflare’s caching level, Standard is generally the safest choice: it respects content that changes with query strings while still benefiting from caching.

If you’re certain that your site’s static content doesn’t vary with query parameters, or if such variations don’t impact user experience, Ignore Query String could potentially increase cache hits and performance.

No Query String is less commonly used for dynamic sites but could be relevant in specific scenarios where any request carrying a query string should bypass Cloudflare’s cache and go to your server.

When using Cloudflare in conjunction with other caching mechanisms (like LiteSpeed Cache), ensure your settings are harmonized across platforms to prevent caching conflicts that might affect your site’s performance or behavior. It’s also important to monitor your site’s performance and adjust these settings as necessary to find the optimal configuration for your specific needs and traffic patterns.