When you work in search engine optimization (SEO), you sometimes need to scan the source page that an inbound link comes from.

Digging through a page's raw HTML by hand is rarely quick or easy.

Fortunately, several free tools and methods can scan a webpage for specific links or domain names. They are useful for SEO audits, link verification, and competitor analysis. Here are a few approaches you can take:

Online Tools

- Broken Link Checkers: While primarily used to find broken links, some broken link checking tools also allow you to see all outbound links from a webpage. Tools like Dead Link Checker, BrokenLinkCheck, and Dr. Link Check can scan a webpage and list all the links found on that page.

- SEO Audit Tools: Some free SEO and website audit tools can list the external links found on a webpage. Tools like Ubersuggest, MozBar (a Chrome extension), and SEMrush offer limited free usage that you can use to check for links to external domains.

Browser Extensions

- Link Highlighting Extensions: Browser extensions like “Check My Links” or “Link Miner” can highlight all the links on a webpage. Some of these tools have the option to filter or search through the displayed links, making it easier to spot specific domain names.

- Developer Tools: Most modern browsers come with built-in developer tools that can be used to inspect the page and search through its elements (including links). While not a dedicated link checker, they offer a quick way to find specific links on a page you’re currently viewing.

Using a Custom Script

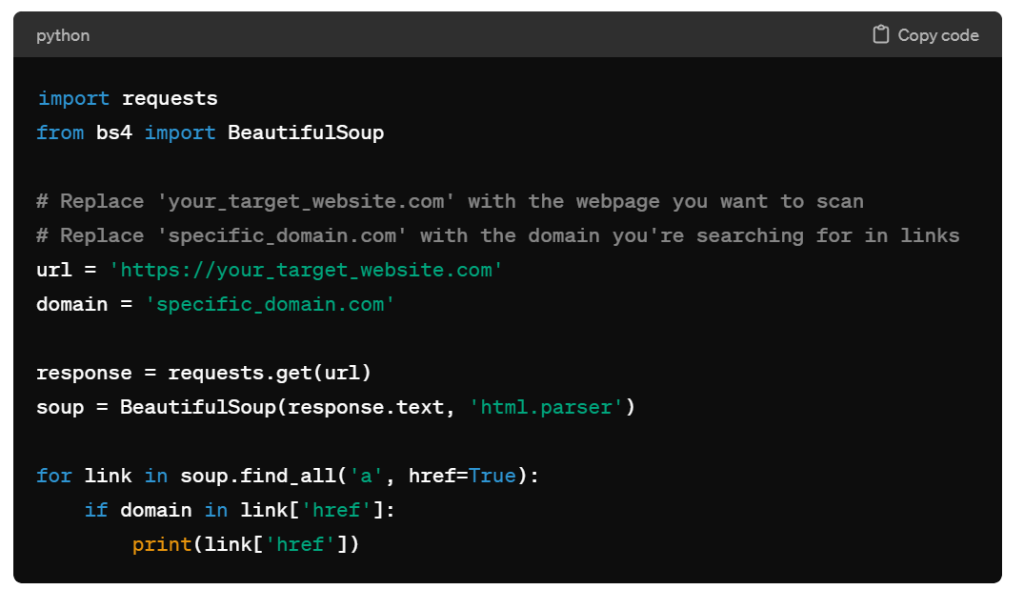

For more tech-savvy users, writing a small script in a language like Python provides the most flexibility. Using the requests library to fetch a webpage and BeautifulSoup to parse its HTML, you can search the page for links containing a specific domain. This method requires some programming knowledge but is highly customizable to your needs.

Example Python Script

Here’s a basic example of how you might use Python to find links to a specific domain on a webpage:

    import requests
    from bs4 import BeautifulSoup

    # Replace 'your_target_website.com' with the webpage you want to scan
    # Replace 'specific_domain.com' with the domain you're searching for in links
    url = 'https://your_target_website.com'
    domain = 'specific_domain.com'

    # Fetch the page; stop early on a timeout or an HTTP error status
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    soup = BeautifulSoup(response.text, 'html.parser')

    # Print every link whose href contains the target domain
    for link in soup.find_all('a', href=True):
        if domain in link['href']:
            print(link['href'])

This script prints every link on ‘your_target_website.com’ whose href contains the text ‘specific_domain.com’. You can adjust the url and domain variables as needed.
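Because the check in the script is a plain substring test, it can also match lookalike domains or a domain name that only appears in a query string. One possible refinement, sketched below, compares the hostname of each link instead. This is an illustration under assumptions rather than a definitive implementation: the names `LinkCollector` and `find_links_to_domain` and the sample HTML are hypothetical, and the sketch swaps BeautifulSoup for Python's standard-library `html.parser` so that it runs without extra installs.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag it sees (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def find_links_to_domain(html, base_url, domain):
    """Return absolute URLs whose host is `domain` or one of its subdomains."""
    collector = LinkCollector()
    collector.feed(html)
    domain = domain.lower()
    matches = []
    for href in collector.hrefs:
        # Resolve relative and protocol-relative links against the page URL
        absolute = urljoin(base_url, href)
        host = (urlparse(absolute).hostname or "").lower()
        if host == domain or host.endswith("." + domain):
            matches.append(absolute)
    return matches


# Made-up sample markup, used only to show the matching behaviour
sample_html = """
<a href="https://example.com/page">direct</a>
<a href="//blog.example.com/post">subdomain</a>
<a href="https://notexample.com/">lookalike</a>
<a href="/about?ref=example.com">relative</a>
"""
print(find_links_to_domain(sample_html, "https://source.test/", "example.com"))
```

Here the lookalike host and the relative link that only mentions the domain in its query string are skipped, while the direct link and the subdomain link are kept. To run it against a live page, you could pass `response.text` and `url` from the script above as the first two arguments.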

Each of these methods has its pros and cons, depending on your specific needs and technical comfort level. Online tools and browser extensions are user-friendly and quick for spot checks, while custom scripts offer more control and flexibility for advanced users or specific requirements.